On a Preference for Human Interaction Over AI Interaction and Why It Doesn't Matter

Disclaimer: This post mostly refers to productivity-related AI, but I do dive a tiny bit into the more artistic applications of some of these tools, and while I’m happy to talk at length about that area of the technology, it’s not my area of expertise and I would hate to inspire any fear in anyone because of my lack of knowledge. So I’ll try to keep this to the productivity and philosophical implications of the work.

Edit: It’s been rightly pointed out to me that some of the things I’m suggesting in this post will affect certain groups of people sooner and more severely than others. I agree with this argument and the way that it was presented, and while I still think that the larger societal change is both necessary and inevitable, I have now shifted my mindset slightly away from the more… “apocalyptic” ideas that I present here toward finding more equitable solutions to these problems using this technology. In short, I’m asking myself, “Do we have to burn it down to build a better building, or can we replace each brick individually until the structure that remains is made of entirely new bricks?” I will leave the content of this post as-is, but I may write a revised copy in the future.

I’m a cautious optimist when it comes to technology of any kind, and I have a particular fascination with recent shifts like Web3 (don’t get me started) and AI. I’ve also said on several occasions, to varying reactions, that my professional goal is to make myself obsolete, and as a leader in a middle management position at a global company, I know that it’s just a matter of time before I’m “Solved” out of the professional equation.

But I’m still very excited about AI.

All around my office, I see people using tools like ChatGPT or Grammarly to help them with the tone of an e-mail they’re about to send, fix their Excel formulas, and ask basic factual questions that prevent them from having to sift through multiple sources on Google.

What I see is people trying to be more effective at their work. There are arguments (I’ve made them) that tools like Grammarly will make people worse writers, but we’re already notoriously bad at conveying our sentiments and thoughts via text alone. I’ve heard people say that when ChatGPT can write Excel formulas it will replace accountants, but did the calculator replace mathematicians? It may have made the general population less math-literate, but it also helped to propel entire fields of mathematics.

I do understand (and feel a tiny bit myself) the personal fear that a tool will take a person’s job. History is full of fables like John Henry and the steam drill, and with AI we’re already seeing that play out at an enormous scale, one that is likely to spark long-overdue societal conversations about how we should be spending our precious little time in the modern age. But aside from this fear, there is a question we should ask whenever we implement a piece of technology that is intended to make work easier: What causes us to resist efforts to simplify people's jobs? Why do we seem to prefer maintaining difficult conditions?

There is a supercharged flurry of capitalistic angst brewing around technologies like AI, just as there was a momentary, panicked land grab when Web3 was first introduced to corporations that really like their closed ecosystems and fear the thought of Decentralized Autonomous Organizations (DAOs). It’s never really been individuals who decide how this technology gets used, and history tends to teach us that better technology benefits a select few and displaces the rest. There’s also the pesky fear of change that infects us all.

To be clear, I don’t know if this will be any different, and I don’t have any arguments for why it would be. A coworker of mine recently said that people will always have a preference for interacting with a human, but that technology will get so good that this preference will become irrelevant. And I agree! My argument instead is that a massive displacement of work itself would be a good thing for everyone because it would force those societal conversations I was referring to (I’d prefer not to be first though, thanks).

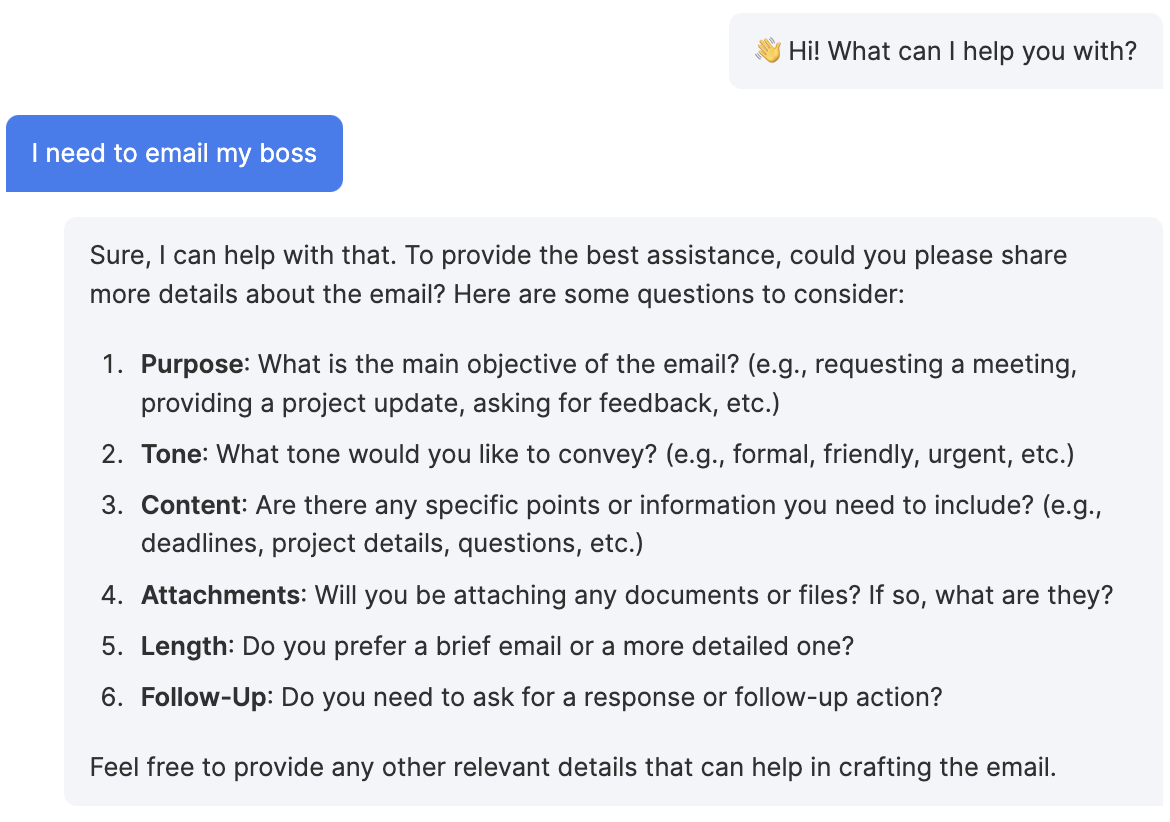

I’ve heard tech billionaires the world over make lofty promises about new careers like “Prompt Engineers,” but last night I spent a few hours creating an agent for my chatbot on my site (check it out) that does a pretty great job of improving poorly constructed prompts like “I need to write an email to my boss.” It’s not perfect, but it’s also proof that if I can build something like this in a few hours, then it’s just a matter of time before someone smarter perfects it.

Previous reply was a generic letter with the subject “Resignation Letter”

Current models are great at many things, but many argue that while they’re great at creative execution, they’ll never be good at creative ideation. The concept is that they can’t imagine a thing that doesn’t exist. But what if that’s wrong? Articles and papers come out nearly every week referring to concepts like Deep Reasoning or Neuro-Symbolism, and new multi-agent approaches to interfaces encourage AI inner monologues.

So, what is “Safe?”

Over coffee today, I had the opportunity to talk with a friend who is a dancer for the Dutch National Ballet. We discussed the value of human creativity using his art form as an example. There are dozens of people who pay to see the same show, often multiple times per week, year after year, in order to see the craft of ballet. No show is the same. No dancer’s performance is ever the same. In fact, some of the allure, he says, is that when something goes wrong, you want to see how the dancer corrects or improvises.

And there are many things like this. There may come a day when there is an all-robot ballet, and it will undoubtedly be astounding. There may be an orchestra that is capable of recreating the original artist’s exact sound, but with crafts like these, we don’t pay for perfection. We value them because we see, in front of us, the effort and dedication that it has taken to perform something that is physically improbable. The practice of getting good at something has a value of its own, beyond the result. It’s the same reason we treasure singers with great voices but look down on artists who use autotune as a crutch.

For me, there is hope, not only that a massive foundational disruption will be good but also that there will still be durable forms of expression where human creativity is the ideal.

I hope this was useful.